Managing Inter-Task Data Transfer in Airflow

Building data pipelines often involves sharing data between tasks within Airflow DAGs. This need for efficient data transfer becomes even more critical as pipelines grow in complexity.

Let's paint a scenario:

In a retail company's ETL pipeline for customer analytics, Apache Airflow orchestrates a series of tasks to extract, transform, analyze, and present customer data. Initially, raw data is extracted from multiple sources like website logs and transaction databases. This data is then cleaned and preprocessed before being analyzed to generate insights on customer behavior and preferences.

Inter-task data transfer ensures seamless flow between tasks, promoting data consistency and workflow efficiency. Ultimately, Apache Airflow enables the retail company to make informed decisions based on data-driven insights, improving marketing strategies and product offerings for enhanced customer satisfaction and business growth.

Let's hypothesis a few scenarios:

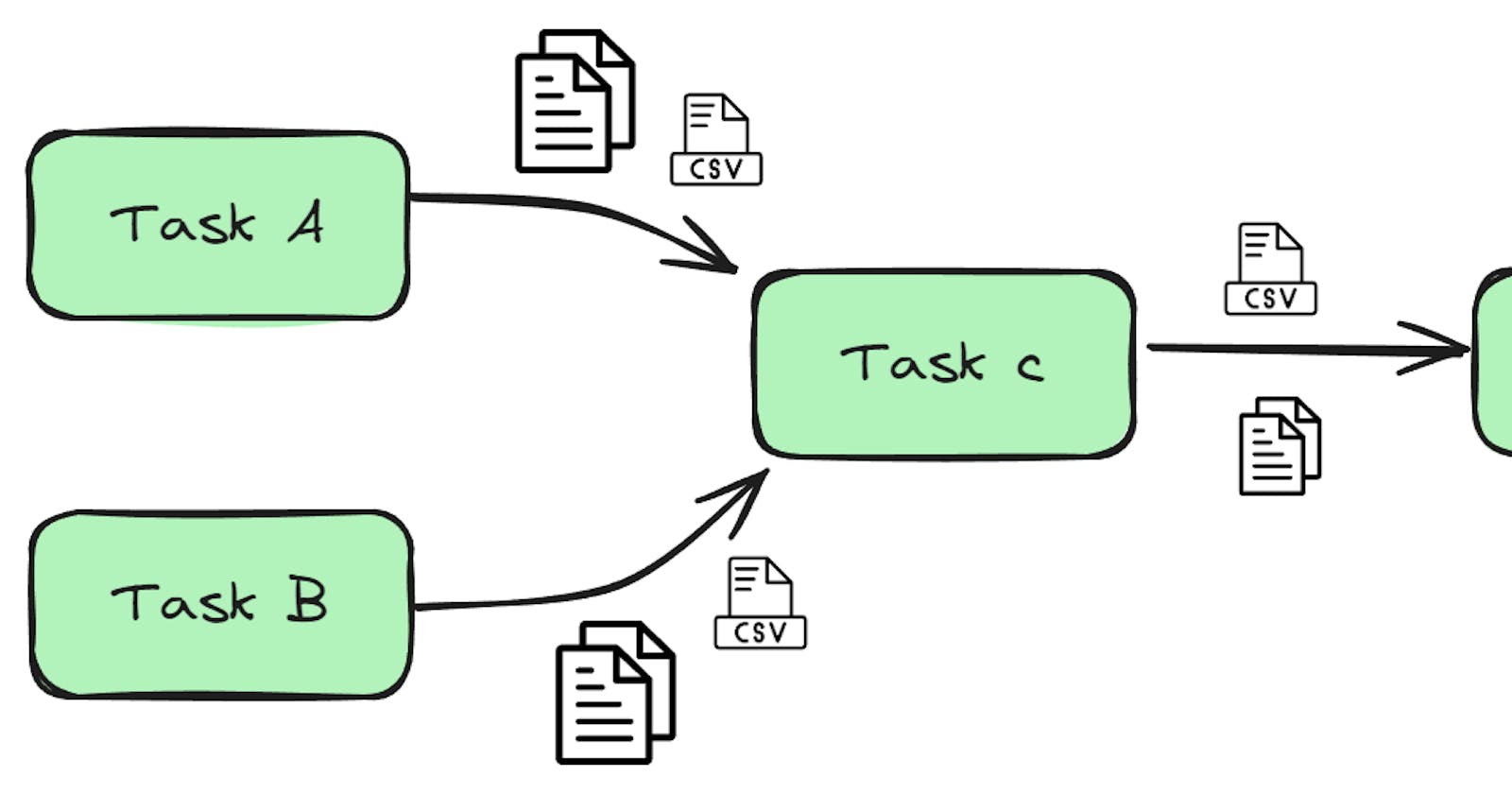

- Modular Workflows:

Break down complex data pipelines into smaller, reusable DAGs.

Each DAG focuses on a specific stage of processing.

Data Transfer: Such a case could be model training. Whereby one dag fetches the data. The output from this dag is passed onto another DAG(Preprocessing dag) to handle the preprocessing before it's passed on to another dag(model training dag) for further processing.

- Conditional Branching:

Make decisions based on results from an upstream DAG.

Depending on the outcome (success, failure, specific data values), trigger a specific downstream DAG.

Data Transfer: Imagine a stock price anomaly detection pipeline. One DAG analyzes prices, and based on the outcome (anomaly detected or not), it triggers either an alerting DAG or a standard processing DAG.

- Data Validation Across Pipelines:

Validate data quality across multiple Airflow workflows.

A dedicated DAG might analyze data quality metrics calculated by other DAGs.

Data Transfer: Imagine separate DAGs for customer data processing and order fulfillment. The processing DAG calculates error rates, which are then transferred (e.g., number of invalid addresses) to a validation DAG for comprehensive quality checks.

- Master-Slave Orchestration:

Organize complex pipelines with a master DAG coordinating child DAGs.

The master DAG triggers child DAGs sequentially, potentially providing configuration data.

Data Transfer: Imagine a complex e-commerce data pipeline. A master DAG might first trigger a child DAG to fetch new customer orders. This data is then transferred (e.g., order IDs) to a separate child DAG for processing payments. This master-slave approach with data transfer keeps the master DAG focused on orchestration, while child DAGs handle specific tasks like order fetching and payment processing.

While these scenarios underscore the importance of inter-task data transfer, challenges arise in determining suitable tools based on data type and size.

We'll explore three approaches to data transfer to understand their effectiveness across different scenarios.

XComs

An alias for “cross-communication”, this is Airflow's in-built mechanism for inter-task data transfer.They are principally defined by a key, value, and timestamp. Xcoms can either be pushed, an alias to sent or pulled, meaning received.

XComPush

XComPush in Airflow acts as a messenger for small data exchanges between tasks. Here's a breakdown of its functionality:

Stores Data: XComPush allows tasks to push small data pieces (flags, summaries, etc.) into Airflow's XCom storage. This data serves as a message or signal for downstream tasks.

Serialization and Metadata: Before storing the data, XComPush serializes it, converting the provided data (e.g., strings, numbers, lists) into a format suitable for storage. Additionally, metadata such as the task ID and execution date are attached to provide context to downstream tasks.

XComPull

On the receiving end, XComPull allows tasks to retrieve the messages stored by upstream tasks. Its functionality includes:

Data Retrieval: XComPull enables tasks to retrieve previously stored data using XComPush within the same DAG run.

- Deserialization and Filtering: Upon retrieval, XComPull deserializes the data, converting it back into its original form for the receiving task's use. Additionally, it allows for optional filtering based on task ID and key, ensuring the task receives only the relevant information.

Data shared using XCom is stored in the Airflow metadata database. You can easily find the configuration for this database connection in the docker-compose.yaml file if you're using a Dockerized Airflow deployment. However, relying solely on XCom for data transfer within Airflow workflows comes with a few limitations:

XCom's Limitations:

1. Data Size Matters:

Designed for Small Data: XCom is primarily intended for transferring small amounts of data like flags, configuration values, or data summaries.

Performance Impact: Pushing or pulling large datasets (e.g., video files, sensor readings) through XCom can significantly slow down your Airflow workflows due to serialization and storage overhead.

2. Limited Data Types:

Basic Types Only: XCom can handle fundamental data types like strings, integers, floats, lists, and dictionaries.

Complex Structures Not Ideal: Transferring complex data structures (custom classes, objects with nested structures) becomes cumbersome and error-prone due to serialization challenges.

Binary Data Issues: While technically possible, storing binary data (e.g., images) in XCom is not recommended due to potential encoding issues and limitations on data size.

3. Single DAG Run Focus:

Limited Scope: XComPull can only retrieve data from the same DAG run where it was pushed using XComPush.

Inter-Workflow Communication Challenges: XCom is not designed for communication between separate Airflow DAGs. Sharing data across workflows requires alternative approaches (more on that later).

4. Visibility and Monitoring Concerns:

Limited Visibility: By default, XCom data is not readily visible in the Airflow UI.

Monitoring Challenges: Monitoring and debugging XCom data transfers can be cumbersome without additional tools or custom logging mechanisms

XCom implementation

This code demonstrates how Airflow's XCom mechanism facilitates communication between tasks within a DAG. The following example showcases a two-step scrape and upload pipeline:

Task 1: Scraping and Pushing Data

The DAG includes a task responsible for scraping data from a website.

This task utilizes

task_instance.xcom_push()to push the extracted quotes to XCom for sharing with subsequent tasks.

Task 2: Retrieving and Processing Data

Another task within the DAG retrieves the scraped quotes using

kwargs['ti'].xcom_pull(). It specifies the key"scraped_quotes"and the upstream task ID ('scrape_quotes') to ensure it fetches the correct data.Once retrieved, the task proceeds to save the quotes as a CSV file.

def scrape_quotes():

url = 'http://quotes.toscrape.com'

response = requests.get(url)

if response.status_code == 200:

soup = BeautifulSoup(response.text, 'html.parser')

quotes = [quote.text.strip() for quote in soup.select('span.text')]

# Store quotes in XCom

task_instance = kwargs['ti']

task_instance.xcom_push(key='scraped_quotes', value=quotes)

return None # Don't directly return quotes

else:

print(f"Failed to fetch quotes. Status code: {response.status_code}")

return []

def save_to_csv(**kwargs):

# Retrieve quotes from XCom

quotes = kwargs['ti'].xcom_pull(key='scraped_quotes', task_ids='scrape_quotes')

if not quotes:

print("No quotes to save.")

return

# Get the directory of the current DAG file

current_dag_directory = os.path.dirname(os.path.abspath(__file__))

# Specify the directory where you want to save the CSV file

output_directory = os.path.join(current_dag_directory, 'output')

# Create the output directory if it doesn't exist

os.makedirs(output_directory, exist_ok=True)

# Create a Pandas DataFrame

df = pd.DataFrame({'Quote': quotes})

# Save to CSV file in the specified output directory

csv_path = os.path.join(output_directory, 'quotes.csv')

df.to_csv(csv_path, index=False)

print(f"Quotes saved to {csv_path}.")

# Set up task dependencies

task_scrape_quotes = PythonOperator(

task_id='scrape_quotes',

python_callable=scrape_quotes,

dag=dag,

)

task_save_to_csv = PythonOperator(

task_id='save_to_csv',

python_callable=save_to_csv,

dag=dag,

)

# Set up task dependencies (already defined correctly)

task_scrape_quotes >> task_save_to_csv

Custom XCom backend

After highlighting the limitations of XCom in the previous section, we now delve into solutions that overcome these constraints. Custom XCom backends offer a flexible alternative to Airflow's default metadata database storage, providing scalability, durability, and enhanced functionality tailored to specific workflow requirements.

Why Use a Custom XCom Backend?

Scalability: If your workflows involve large amounts of XCom data, the metadata database might become a bottleneck. Custom backends can leverage scalable storage solutions like cloud storage.

Durability: Certain custom backends (e.g., object storage) can offer higher durability and redundancy compared to the metadata database.

Security: Implement custom access control mechanisms for XCom data within the chosen backend system.

Specialized Storage: Specific data types (e.g., geospatial data) might benefit from custom backends designed for efficient storage and retrieval.

Custom XCom Backend

Creating a custom XCom backend involves defining a class that inherits from the BaseXCom class. This base class provides the foundation for interacting with Airflow's XCom system and defines core functionalities, including methods for serialization and deserialization.

BaseXCom Class Responsibilities:

Inheritance: It's a base class meant to be subclassed by your custom XCom backend implementation.

Database Integration: It interacts with the Airflow metadata database for tasks like retrieving metadata about XCom entries (e.g., task ID, execution date, key).

Core XCom Functionality: It defines methods for essential XCom operations, including

serialize_value()for converting data into a suitable format anddeserialize_value()for retrieving serialized data.

While the preceding example provides a basic implementation, developers can override serialization steps to tailor XCom backends to application-specific needs.

This flexibility extends to integrating with cloud storage and implementing serialization methods for diverse data types like pandas DataFrames.

Here's a link to additional information on the above.

Custom XCom Backend Example

Below is a simple implementation demonstrating how to create a custom XCom backend. In this example, data is stored in a local file.

Custom XCom Class:

We define a class named

FileXComBackendthat inherits fromBaseXCom.It overrides the

serializeanddeserializemethods to handle data conversion before storing it in the file and vice versa.

Storing and Retrieving Data:

The DAG utilizes this custom backend by setting the

xcom_backendparameter in the DAG definition.Tasks within the DAG interact with XCom as usual using

task_instance.xcom_pushandtask_instance.xcom_pull, but the data is stored and retrieved based on the custom backend's logic.

from airflow import DAG

from airflow.operators.python_operator import PythonOperator

from airflow.exceptions import AirflowException

from typing import Any

class FileXComBackend(BaseXCom):

def __init__(self, filepath: str):

self.filepath = filepath

def serialize(self, value: Any) -> str:

# Convert data to a JSON string before storing

import json

return json.dumps(value)

def deserialize(self, data: str) -> Any:

# Load data from JSON string

import json

return json.loads(data)

def write(self, key: str, value: Any, execution_date: datetime, task_id: str) -> None:

serialized_data = self.serialize(value)

with open(self.filepath, 'a') as f:

f.write(f"{key},{execution_date},{task_id},{serialized_data}\n")

def read(self, key: str, execution_date: datetime, task_id: str) -> Any:

with open(self.filepath, 'r') as f:

for line in f:

line_data = line.strip().split(',')

if len(line_data) == 4 and line_data[0] == key and line_data[1] == str(execution_date) and line_data[2] == task_id:

return self.deserialize(line_data[3])

raise AirflowException(f"XCom value not found: key={key}, execution_date={execution_date}, task_id={task_id}")

def delete(self, execution_date: datetime, task_id: str) -> None:

# Implement logic to delete specific XCom entries from the file

pass # Placeholder for deletion logic

# Define the DAG with custom XCom backend

with DAG(dag_id='custom_xcom_example', start_date=datetime(2024, 3, 26)) as dag:

custom_backend = FileXComBackend('/tmp/custom_xcom.log')

# Tasks using custom XCom

task1 = PythonOperator(

task_id='task1',

python_callable=lambda: print("Storing data with custom XCom"),

dag=dag,

xcom_backend=custom_backend,

)

task2 = PythonOperator(

task_id='task2',

python_callable=lambda: print("Retrieving data with custom XCom"),

dag=dag,

xcom_backend=custom_backend,

)

# Set task dependencies

task1 >> task2

Explanation:

This example defines a

FileXcomBackendclass that inherits fromBaseXCom.It uses a local file (

/tmp/custom_xcom.log) for storing XCom data.The

serializeanddeserializemethods handle data conversion to and from JSON format before writing/reading from the file.The DAG sets the

xcom_backendparameter to the custom backend instance for both tasks.

While this serves as a basic implementation of a custom XCom backend, more complex scenarios such as cloud storage integration or specialized data serialization may require additional customization.

For simpler data transfer needs, utilizing intermediate storage offers a more straightforward solution.

Let's explore intermediate storage next.

Intermediate Storage

Intermediate storage refers to a temporary and accessible location where data is stored during an Airflow workflow execution. This data serves as a bridge between tasks, allowing them to access and process large datasets efficiently.

Benefits of Intermediate Storage:

Scalability: Handles large datasets without impacting the Airflow metadata database.

Flexibility: Supports diverse data formats and sizes.

Decoupling: Enables independent task execution, without relying on XCom.

Potential for Reuse: Processed data can be leveraged in subsequent workflows.

Common Intermediate Solutions:

Cloud Storage: Offers scalability and reliability (e.g., S3, GCS).

Local Filesystem: Suitable for development and smaller datasets, albeit with production limitations.

Network Attached Storage (NAS): Provides centralized storage accessible by multiple Airflow workers.

Choosing the Right Solution:

The best solution depends on your specific needs. Consider factors like:

Data Size: Cloud storage is ideal for massive datasets, while local storage might suffice for smaller ones.

Durability: Cloud storage offers high availability and redundancy, while local storage might need backups.

Security: Cloud storage providers offer robust access control mechanisms.

Cost: Cloud storage can incur costs based on usage, while local storage might be free but has resource limitations.

Implementation with Airflow:

Define the storage location, upload data using appropriate libraries (e.g., boto3 for S3), and download data for processing in downstream tasks.

Simple Implementation Example:

def scrape_all_pages():

# code to scrape as before, in the previous demonstrations

if new_reviews:

new_reviews_df = pd.DataFrame({"Review": new_reviews, "Date": new_dates})

# Cloud Storage Setup

client = storage.Client()

bucket_name = "your-bucket-name" # Replace with your bucket name

blob_name = "raw_reviews.csv" # Replace with desired filename

# Upload data to GCS

try:

bucket = client.bucket(bucket_name)

blob = bucket.blob(blob_name)

blob.upload_from_string(new_reviews_df.to_csv(index=False), content_type="text/csv")

logging.info("New reviews uploaded to Google Cloud Storage")

except Exception as e:

logging.error(f"Error occurred while uploading to GCS: {str(e)}")

def process_data():

# Cloud Storage Setup

client = storage.Client()

bucket_name = "your-bucket-name" # Replace with your bucket name

blob_name = "raw_reviews.csv" # Replace with desired filename

# Download data from GCS

try:

bucket = client.bucket(bucket_name)

blob = bucket.blob(blob_name)

data = blob.download_as_string()

reviews_df = pd.read_csv(data, index_col=0)

logging.info("Reviews data downloaded from Google Cloud Storage")

# Perform some processing on the data (example: print number of reviews)

print(f"Total number of reviews: {len(reviews_df)}")

except Exception as e:

logging.error(f"Error occurred while downloading from GCS: {str(e)}")

Explanation:

scrape_and_store_dag.py remains the same, scraping reviews and uploading new data to GCS.

process_data_dag.py defines a new DAG for processing the stored data:

It downloads the data from GCS using the same bucket and blob configurations.

It reads the downloaded data as a CSV DataFrame.

Custom XCom VS Intermediate Data Storage

Data Granularity: Intermediate storage typically stores entire files or datasets, whereas XCom allows for finer-grained data exchange between tasks. If you need to pass small pieces of data or metadata between tasks, XCom might be more suitable.

Task Dependency Management: XCom is tightly integrated with task dependencies in Airflow, making it easy to pass data between dependent tasks within a DAG. If your workflow requires passing data between tasks within the same DAG, XCom can provide a more streamlined solution compared to intermediate storage.

Real-time Data Sharing: XCom allows for real-time data sharing between tasks as they execute, whereas intermediate storage may introduce latency due to the need to write and read data to and from storage. If your workflow requires immediate access to shared data during task execution, XCom may be preferable.

Integration with Airflow Ecosystem: XCom is a built-in feature of Apache Airflow, making it easy to use and integrate with other Airflow components. If you're already using Airflow for workflow orchestration, leveraging XCom for data exchange can simplify your architecture and reduce dependencies on external systems.

Simplicity and Convenience: For workflows where passing small amounts of data between tasks is sufficient, using XCom can be simpler and more convenient than setting up and managing intermediate storage systems.

However, there are also scenarios where intermediate storage might be more appropriate:

Large Data Volumes: If your workflow involves handling extremely large volumes of data that exceed the practical limits of XCom or Airflow's metadata database, intermediate storage may be a better option for storing and managing this data.

Complex Data Types: If your workflow deals with complex data types (e.g., large binary objects, structured datasets) that are not easily serialized and deserialized using XCom, intermediate storage solutions may offer more flexibility and performance.

External System Integration: If your workflow needs to exchange data with external systems or services that are not directly integrated with Airflow, intermediate storage can serve as a neutral data exchange layer that facilitates interoperability between Airflow and external systems.

In conclusion, choosing the right solution is dependent on your application. Equipped with this knowledge, you are surely on your way to making better decisions tailored to your requirements.